The data quality crisis in the market research industry has reached a tipping point. Professionals in the field have discussed the importance of data integrity for years, but the issue now carries a new urgency. As the industry grapples with fraudulent responses, disengaged participants, an influx of bots, and AI-generated responses, the need for decisive action is clearer than ever.

With the problem growing and no end in sight, what can your team do today to improve data quality?

The existential threat of poor data quality

There is growing concern among market research professionals and their clients about the reliability of survey data. Some companies have begun to question whether quantitative research is worth conducting at all, because they no longer trust the data. This marks a significant shift for the industry and shows how serious the data quality problem has become.

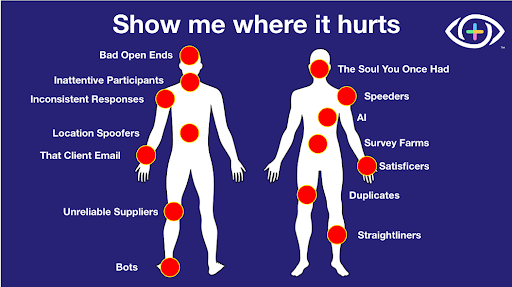

Several factors contribute to this crisis, including disengaged participants, survey farms, organized fraud, increased use of bots, and AI-generated responses. In the past, the industry has focused on treating the symptoms, weeding out bad data after it has been collected. That approach is no longer sufficient in today’s landscape. It’s time to treat the root causes of poor data quality.

The true cost of poor data quality

To quantify the financial impact of poor data quality, our team worked with a number of clients to estimate the costs associated with a typical study. We found that, on average, quality issues waste around $650 on a B2C study and approximately $1,465 on a B2B study. These costs stem from several sources, including sample reconciliations and reversals, field management, data cleaning, and refielding.

The damage extends beyond financial losses. Market research firms can suffer significant reputational harm when they fail to deliver reliable results to their clients, and many industry professionals have learned this the hard way by losing projects and clients to data quality issues.

What can be done?

There are several ways the industry approaches cleaning its datasets before analysis, and combating data quality issues effectively requires employing multiple strategies.

If your organization neglects even one of these strategies, you could be putting your data at risk.

1. Employ best-in-class technology: Leverage advanced tools and data quality platforms designed to detect and prevent survey fraud rather than relying solely on in-house solutions.

(Shameless plug: our dtect platform detects fraud before it gets into the survey.)

2. Address different types of fraud comprehensively: Implement a multi-faceted approach that tackles various forms of fraud, including duplicate survey entrants, location spoofing, bots, and organized fraud schemes.

3. Be selective with supply: Carefully vet sample providers and monitor supply over time to identify trends in data quality. Ask suppliers tough questions about their panel recruitment and quality control measures – and put what they say they do to the test by implementing independent data quality checks.

4. Account for the whole project life cycle: Ensure that data quality measures are in place throughout the entire research process, including questionnaire design, fielding, analysis, and reporting.

5. Educate yourselves and your clients: Stay informed about the latest trends and threats in survey fraud, and be transparent with clients about the challenges and the steps being taken to address them.
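Strategies 2 and 3 lend themselves to simple, independent checks you can run on your own data. The Python sketch below is a minimal illustration under stated assumptions, not dtect's method or any supplier's API: it assumes each survey entrant arrives with a supplier name, a fielding wave, a device fingerprint, an IP-derived country, and a self-reported country (all hypothetical field names). It flags entrants that share a device fingerprint (possible duplicates) or whose IP country disagrees with the country they claim (a crude location check), then computes a flag rate per supplier and wave so quality trends can be compared over time. Real device fingerprinting and location-spoofing detection (VPNs, proxies, emulators) are considerably more involved.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Entrant:
    # All field names are hypothetical; map them to whatever your survey
    # platform and sample providers actually record.
    respondent_id: str
    supplier: str            # sample provider that sent the entrant
    wave: str                # fielding period, e.g. a week label
    device_fingerprint: str  # assumed stable device/browser identifier
    ip_country: str          # country inferred from the entrant's IP address
    claimed_country: str     # country the entrant reported in the screener


def flag_entrants(entrants: list[Entrant]) -> dict[str, list[str]]:
    """Return {respondent_id: [reasons]} for entrants that fail simple checks."""
    # Count how many entrants share each device fingerprint.
    fingerprint_counts = Counter(e.device_fingerprint for e in entrants)
    flags: dict[str, list[str]] = {}
    for e in entrants:
        reasons = []
        # Every entrant on a shared fingerprint is flagged, including the first one.
        if fingerprint_counts[e.device_fingerprint] > 1:
            reasons.append("duplicate_device")
        # Crude location check: IP country disagrees with the claimed country.
        if e.ip_country != e.claimed_country:
            reasons.append("location_mismatch")
        if reasons:
            flags[e.respondent_id] = reasons
    return flags


def flag_rate_by_supplier_and_wave(
    entrants: list[Entrant], flags: dict[str, list[str]]
) -> dict[tuple[str, str], float]:
    """Share of flagged entrants per (supplier, wave), for tracking trends over time."""
    totals = Counter((e.supplier, e.wave) for e in entrants)
    flagged = Counter((e.supplier, e.wave) for e in entrants if e.respondent_id in flags)
    # Counter returns 0 for missing keys, so clean groups get a rate of 0.0.
    return {key: flagged[key] / totals[key] for key in totals}


# Small illustrative dataset (invented values, not real study data).
entrants = [
    Entrant("r1", "SupplierA", "W1", "fp1", "US", "US"),
    Entrant("r2", "SupplierA", "W1", "fp1", "US", "US"),
    Entrant("r3", "SupplierB", "W1", "fp2", "VN", "US"),
    Entrant("r4", "SupplierB", "W1", "fp3", "US", "US"),
    Entrant("r5", "SupplierB", "W2", "fp4", "US", "US"),
]
flags = flag_entrants(entrants)
rates = flag_rate_by_supplier_and_wave(entrants, flags)
print(flags)
print(rates)
```

Flagging every entrant on a shared fingerprint, rather than keeping the first one, is a deliberately conservative choice: without more context you cannot tell which copy is the legitimate respondent. Running the rate calculation on each new wave gives a simple per-supplier trend line to bring to the "tough questions" conversation with providers.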

The data quality crisis in market research is a critical issue that demands immediate attention and action from industry professionals. The consequences, including a loss of client confidence, hit the pocketbook in more ways than one.

Only by taking action and prioritizing the integrity of survey data can the industry restore trust and continue to provide valuable insights to clients in an increasingly challenging landscape.

![Data cleaning through the years [a funny yet true timeline]](https://dtect.io/wp-content/uploads/2025/04/20250326-20250326-dt-data-cleaning-through-the-years-hz-300x156.png)